Available on Any Process in 6-8 Months: Enables Reconfigurable AI Matrix Multipliers of Any Size

MOUNTAIN VIEW, Calif., June 25, 2018 -- (PRNewswire) -- Flex Logix® Technologies, Inc., the leading supplier of embedded FPGA (eFPGA) IP, architecture and software, announced today a new member of the EFLX®4K eFPGA Core Family: the EFLX4K Artificial Intelligence (AI) eFPGA core. This new core has been specifically designed to enhance the performance of deep learning by 10X and enable more neural network processing per square millimeter.

Many companies are using FPGAs to implement AI, and more specifically machine learning, deep learning and neural networks as approaches to achieve AI. The key functions needed for AI are matrix multipliers, which consist of arrays of MACs (multiplier accumulators). In existing FPGAs and eFPGAs, the MACs are optimized for DSP, with larger multipliers, pre-adders and other logic that are overkill for AI. For AI applications, smaller multipliers such as 16 bits or 8 bits, with the ability to support both modes with accumulators, allow more neural network processing per square millimeter.
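To make the role of the MAC concrete, the following is a minimal Python sketch of a matrix multiply expressed purely as multiply-accumulate steps. It is an illustration only, not Flex Logix's hardware design or software flow: the function names (`mac`, `matmul_mac`, `check_signed`) are hypothetical, and the choice of signed two's-complement operands is an assumption, since the announcement specifies only the 8-bit and 16-bit operand widths.

```python
def check_signed(value, bits):
    """Return value if it fits a signed integer of the given width (assumed format)."""
    lo, hi = -(1 << (bits - 1)), (1 << (bits - 1)) - 1
    if not lo <= value <= hi:
        raise ValueError(f"{value} does not fit in {bits} signed bits")
    return value


def mac(acc, a, b):
    """One multiply-accumulate step: add the product a * b to the accumulator."""
    return acc + a * b


def matmul_mac(A, B, bits=8):
    """Multiply matrices A (m x k) and B (k x n) using only MAC steps.

    Returns the product and the number of MAC operations performed (m * n * k).
    Python integers are unbounded, so the accumulator here never overflows;
    a real accumulator has a fixed width.
    """
    inner = len(B)
    if any(len(row) != inner for row in A):
        raise ValueError("inner dimensions of A and B do not match")
    rows, cols = len(A), len(B[0])
    C = [[0] * cols for _ in range(rows)]
    mac_count = 0
    for i in range(rows):
        for j in range(cols):
            acc = 0
            for k in range(inner):
                a = check_signed(A[i][k], bits)
                b = check_signed(B[k][j], bits)
                acc = mac(acc, a, b)
                mac_count += 1
            C[i][j] = acc
    return C, mac_count


if __name__ == "__main__":
    product, count = matmul_mac([[1, 2], [3, 4]], [[5, 6], [7, 8]])
    print(product, count)  # [[19, 22], [43, 50]] 8
```

A 2x2 by 2x2 product takes 8 MAC steps, and the count grows as m * n * k, which is why the number of MACs available per square millimeter bounds how much matrix work fits in a given area.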

"We've had significant customer interest in using eFPGA for AI applications because of the performance advantages it can deliver in these chip designs," said Geoff Tate, CEO and co-founder of Flex Logix. "In fact, one of the first customers Flex Logix announced was Harvard, who chose our eFPGA for their deep learning design. Not only will they be presenting this chip at Hot Chips next month, but they are already working on a follow-on 16nm AI chip that will make more extensive use of the Flex Logix EFLX eFPGA."

AI customers want more MACs/second and more MACs/square millimeter, but they also want the flexibility of eFPGA to reconfigure designs because AI algorithms are changing rapidly. They require the ability to switch between 8-bit and 16-bit modes as needed and to implement matrix multipliers of varying sizes to meet their applications' performance and cost constraints.

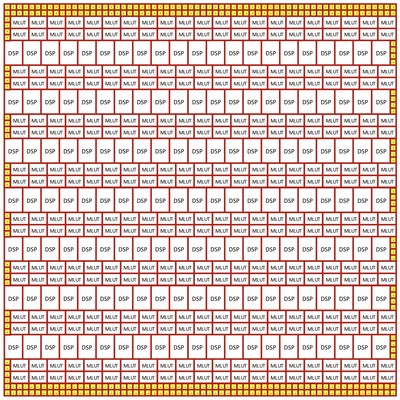

The EFLX4K AI eFPGA core leverages Flex Logix's patented XFLX™ interconnect for an area-efficient, reconfigurable AI solution. It uses a new AI-MAC architecture capable of implementing 8-bit MACs or 16-bit MACs reconfigurably (as well as 16x8 and 8x16). A single EFLX4K AI core in 16nm, for example, will be about 1.2 square millimeters with 441 8-bit MACs running at 1GHz, for a throughput of 441 GigaMACs/second at worst-case silicon conditions. The EFLX4K AI core can be arrayed up to at least a 7x7, enabling performance of ~22 TeraMACs/second in worst-case silicon conditions.
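The throughput figures above follow from simple arithmetic, which the short Python sketch below reproduces. The constants come from this announcement (441 8-bit MACs per core, 1GHz, about 1.2 square millimeters in 16nm); the function names are hypothetical, and the sketch assumes each MAC completes one operation per clock cycle and that throughput scales linearly with the number of cores in the array.

```python
MACS_PER_CORE = 441    # 8-bit MACs in one EFLX4K AI core (from the announcement)
CLOCK_GHZ = 1.0        # worst-case clock rate quoted for 16nm
CORE_AREA_MM2 = 1.2    # approximate area of one core in 16nm


def core_throughput_gmacs(macs=MACS_PER_CORE, clock_ghz=CLOCK_GHZ):
    """GigaMACs/second for one core, assuming one MAC operation per cycle."""
    return macs * clock_ghz


def array_throughput_tmacs(rows, cols):
    """TeraMACs/second for a rows x cols array, assuming linear scaling."""
    return rows * cols * core_throughput_gmacs() / 1000.0


def core_mac_density(macs=MACS_PER_CORE, area_mm2=CORE_AREA_MM2):
    """8-bit MACs per square millimeter for a single core."""
    return macs / area_mm2


if __name__ == "__main__":
    print(core_throughput_gmacs())                 # 441.0 GigaMACs/second
    print(round(array_throughput_tmacs(7, 7), 3))  # 21.609 TeraMACs/second
    print(round(core_mac_density(), 1))            # 367.5 MACs per mm^2
```

A 7x7 array holds 49 cores, so 49 x 441 = 21,609 GigaMACs/second, which rounds to the ~22 TeraMACs/second quoted above.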

The EFLX4K AI is footprint compatible with currently available, silicon proven EFLX4K cores to allow architects and designers the ultimate flexibility in RTL reconfigurability.

The EFLX4K AI eFPGA core is fully supported by Flex Logix's existing software flow using the EFLX Compiler.

As with any EFLX core, the EFLX4K AI eFPGA core can be implemented on any process in six to eight months. Flex Logix's unique eFPGA architecture (XFLX high-density interconnect and ArrayLinx™ interconnect for tiling arrays of any size) enables much faster architectural innovation and iteration than traditional FPGA design approaches. An EFLX4K AI eFPGA core is ~1.2x the area of an EFLX4K Logic core (same width, ~1.2x height).

See us at DAC Booth #2318 this week to learn more, or contact us by email.

About Flex Logix

Flex Logix, founded in March 2014, provides solutions for reconfigurable RTL in chip and system designs using embedded FPGA IP cores, architecture and software. eFPGA can accelerate key workloads 10-100x compared with processors, enable chips to adapt to changing algorithms, protocols, etc., and customize mask sets to meet the needs of multiple customers or market segments. Flex Logix has unique, patented technology for implementing eFPGA: XFLX hierarchical interconnect with twice the density of traditional FPGA mesh interconnect, ArrayLinx interconnect enabling arrays of various sizes and features to be constructed in days from silicon-proven GDS blocks, and RAMLinx for integrating any kind of RAM the customer needs. Flex Logix's co-founders Cheng Wang and Dejan Markovic were recognized with the prestigious Outstanding Paper Award by ISSCC for their paper on XFLX technology. Flex Logix's other co-founder, Geoff Tate, was the founding CEO of Rambus, which pioneered the Semiconductor IP business model, taking the company from 4 employees to a $2 billion market capitalization. The company's technology platform delivers significant customer benefits by dramatically reducing design and manufacturing risks, accelerating technology roadmaps, and bringing greater flexibility to customers' hardware. Flex Logix has secured approximately $13 million of venture-backed capital, is headquartered in Mountain View, California, and has sales rep offices in China, Europe, Israel, Japan and Taiwan. More information can be obtained at http://www.flex-logix.com or follow on Twitter at @efpga.

MEDIA CONTACTS

Kelly Karr

Tanis Communications

kelly.karr@taniscomm.com

+408-718-9350

Copyright 2018. All rights reserved. Flex Logix and EFLX are registered trademarks of Flex Logix, Inc.

View original content with multimedia:

http://www.prnewswire.com/news-releases/flex-logix-improves-deep-learning-performance-by-10x-with-new-eflx4k-ai-efpga-core-300671327.html

SOURCE Flex Logix Technologies, Inc.

Contact:

Company Name: Flex Logix Technologies, Inc.

Web: http://www.flex-logix.com